During the 10 plus years Thoughtworks has been in the Continuous Delivery (CD) ecosystem, we’ve regularly come across people wanting to try our tools (GoCD and Snap CI) as they start their journey toward CD. Very often, in attempting to support teams new to CD, we suggest that they pause any tool evaluation and consider whether their organization is actually ready to embark on this journey. If you do not frankly assess your team’s readiness, the result can be a massive failure. The path to CD should not start with the immediate adoption of a CD tool.

In part one of this series, we explored some core development practices that are prerequisites for CD. In this part, we’ll look at a variety of feedback loops—both manual and automated—your organization should have in place before rolling out CD.

Feedback loops and Continuous Delivery

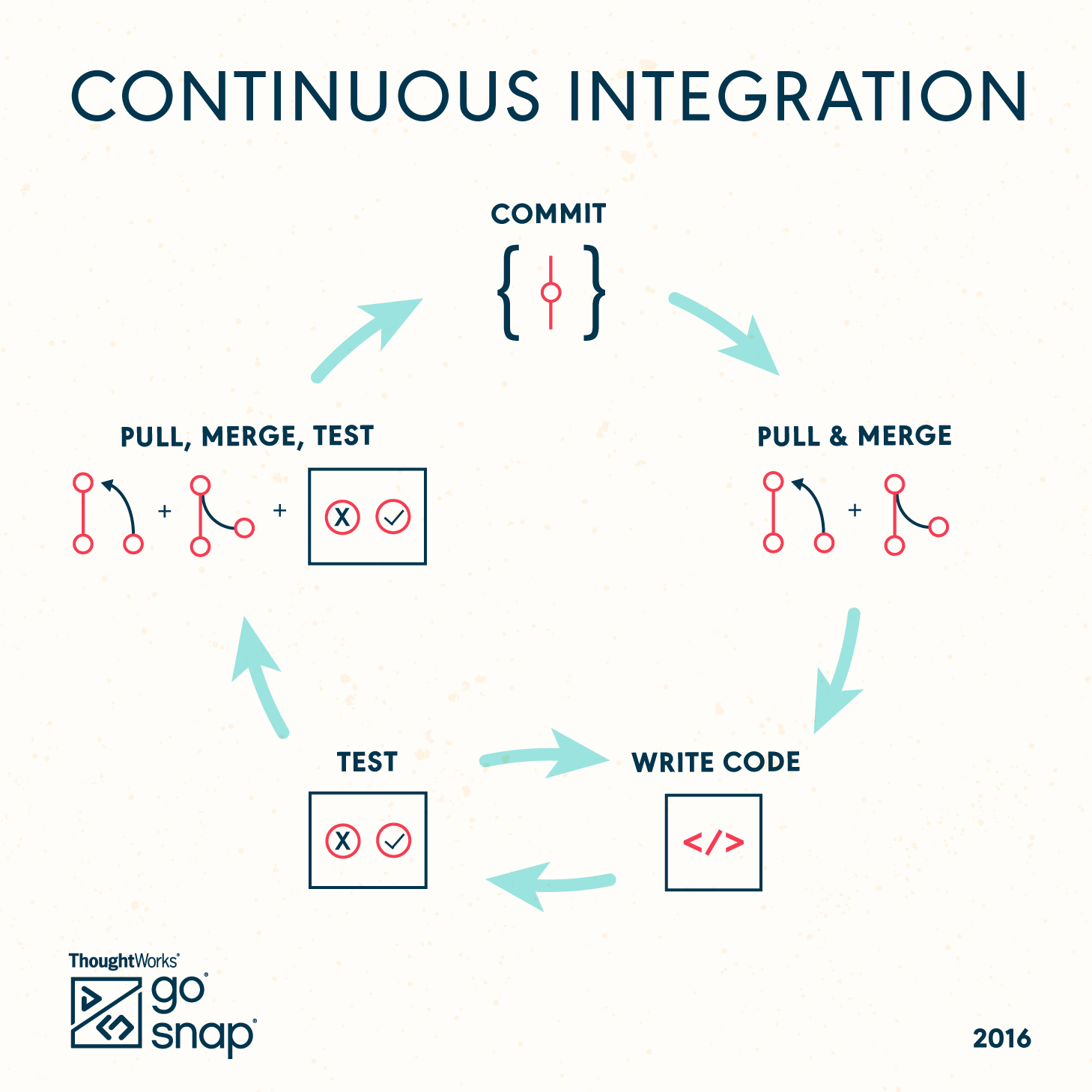

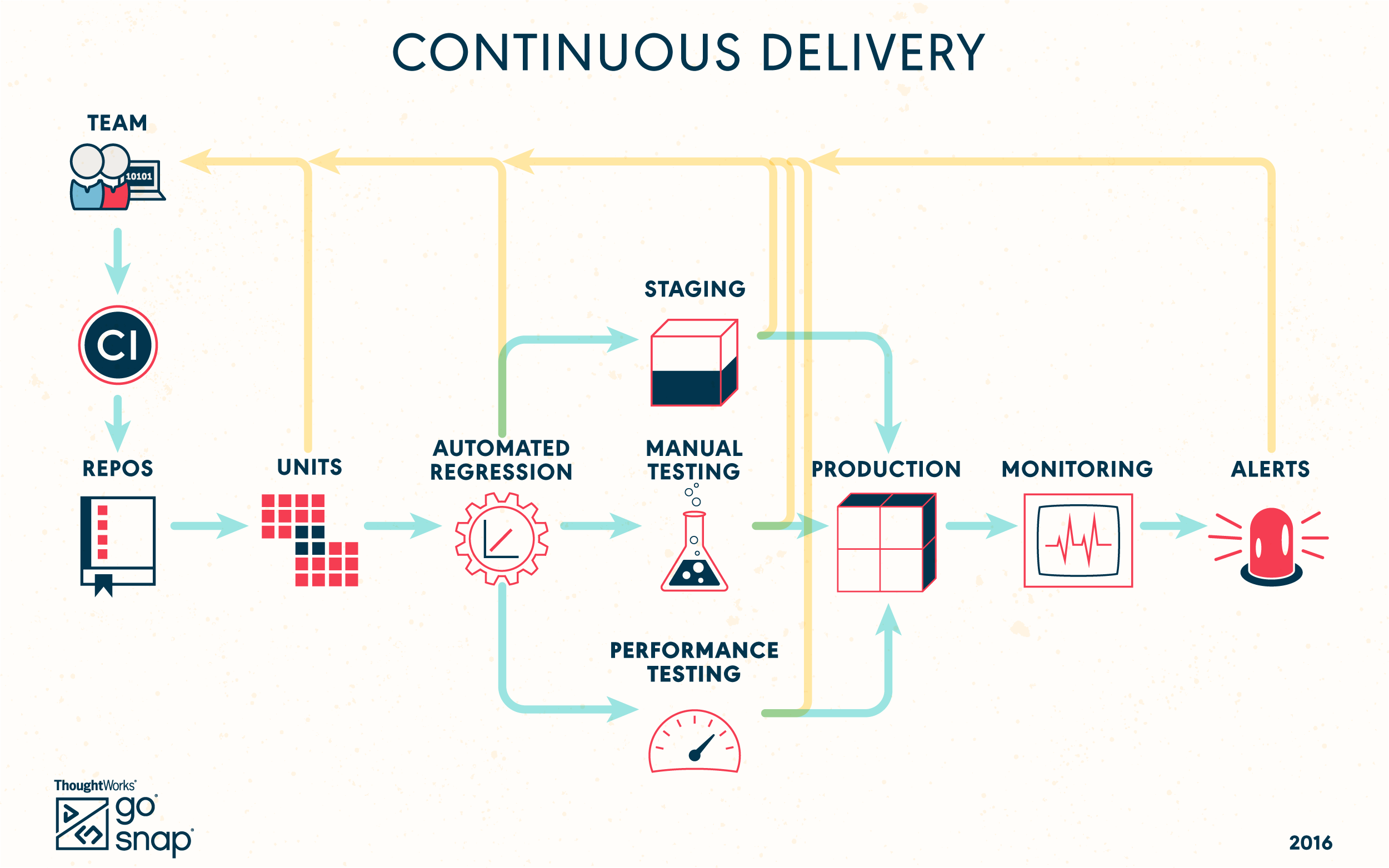

The aim of Continuous Delivery is to release software faster, more reliably, and more frequently. Given that, diagrams of CD typically depict a linear flow. On the surface, this is quite different from Continuous Integration, which is usually shown as a loop.

But CD as a linear flow is an incomplete picture. A good deployment pipeline has numerous feedback loops along the way. At each stage of the pipeline, verifications are run. If they pass, the pipeline continues. If they fail, the pipeline halts and the team responds appropriately to the feedback. The feedback along the way prevents CD from being chaos. Poor quality will almost never reach production in a well-designed pipeline.
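
To make the halt-on-failure behavior concrete, here is a minimal sketch in Python. It is not how GoCD or any particular tool implements a pipeline; the stage names and the `run_pipeline` function are hypothetical and exist only to illustrate the idea that each stage is a verification and a failure stops everything after it.

```python
from typing import Callable, List, Tuple

# A stage is a (name, check) pair; the check returns True when verification passes.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failing verification."""
    completed: List[str] = []
    for name, check in stages:
        if not check():
            # Feedback: report which stage failed and halt; later stages never run.
            print(f"FAILED at stage '{name}'; halting pipeline")
            return False, completed
        completed.append(name)
    return True, completed


if __name__ == "__main__":
    # Hypothetical stages; real ones would invoke build and test tooling.
    stages = [
        ("unit tests", lambda: True),
        ("regression tests", lambda: False),
        ("deploy", lambda: True),
    ]
    ok, completed = run_pipeline(stages)
    print(ok, completed)
```

In this sketch the failing regression stage means the deploy stage is never attempted, which is the property that keeps poor quality away from production.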

Most of the feedback loops you find in a deployment pipeline are good practices in and of themselves. You might already be doing some or most of them. We think you should have many of these in place before moving forward with CD.

Test automation

The most common feedback loop in any deployment pipeline is the execution of automated tests. You must have a solid test automation strategy before attempting CD. Some people like the approach of the test pyramid. We’re actually fine with any sensible approach, as long as it’s fast and reliable. There are myriad types of automated tests, and which ones you use will depend upon your circumstances. Here, we will take a look at three of the most important types: unit, regression, and performance.

Unit tests

Unit tests verify your application at the most granular level, typically methods or classes. They are fast, easy to maintain, and support rapid change of your application. Unit tests should be the foundation of your automation strategy. If your teams don’t value a thorough and fast unit test suite, they won’t be able to move fast or with confidence.

Some points to consider when assessing your team:

- The suite must be fast. What’s fast? A few minutes on a large code base is OK. But faster is better. Slow unit tests result in a slow, horribly frustrating development flow.

- On a mature team, the testers will be comfortable with pushing as much of your test automation as possible into your unit test layer.

- Code coverage is important, but tracking coverage metrics is generally only beneficial for a team learning the basics.

- Some frameworks and platforms are known to be slow when it comes to unit tests. Do not fight or subvert a framework to make tests fast. Instead, consider switching your framework or platform.

Regression test suite

A regression test suite verifies that your entire application actually works. This suite adds a ton of value to a deployment pipeline. For many, the regression stage of a pipeline gives the confidence needed to deploy. We want to make a couple of points about this.

Firstly, regression tests should be 100 percent automated. They are change-detectors and do not require brain power to execute. A manual regression stage in your deployment process will prove painful. Work to get rid of it. Your testers can add more value elsewhere.

Secondly, we reject the notion that a regression suite must mean slow, flaky Selenium tests. Our take is, yes, it’s a fair reputation, but it was earned by many teams doing it wrong. How to author and maintain an automated regression suite is a book-worthy topic, but quickly:

- Don’t couple them to small stories or tasks. Only consider them in the context of the entire application.

- Relentlessly prune the suite. Keep it tight. Err on the side of leaving something uncovered rather than accepting duplication.

- Treat them as production code. Keep things very clean.

- Have programmers write them. Train your testers to code if they are interested in automation. Avoid drag-and-drop programming.

- Do not accept flaky tests. Fix them or get rid of them.

- Even the best suites we’ve seen tend to be slow. Embrace using some combination of hardware, virtualization, and cloud to parallelize execution.
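
One way to act on the "do not accept flaky tests" point is to rerun a suspect test several times against unchanged code and see whether its result is consistent. The following Python sketch assumes a test can be represented as a callable returning True on pass; `classify_test` and its labels are hypothetical names for illustration, not part of any test framework.

```python
import itertools
from typing import Callable


def classify_test(test: Callable[[], bool], runs: int = 10) -> str:
    """Run the same test repeatedly against unchanged code and classify it."""
    results = [test() for _ in range(runs)]
    if all(results):
        return "stable-pass"
    if not any(results):
        return "stable-fail"
    # Mixed results with no code change: the test itself is unreliable.
    return "flaky"


if __name__ == "__main__":
    # Simulated test that alternates between passing and failing.
    outcomes = itertools.cycle([True, False])
    print(classify_test(lambda: next(outcomes), runs=4))
    print(classify_test(lambda: True))
```

A test classified as flaky is a candidate for fixing or deleting; a consistently failing test is a different problem and points at the application or the test's assumptions.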

One caveat to note: the best testers will want to do a manual regression every so often, just to help structure how they think about the application. That’s a good thing so long as it’s about their being thoughtful and not how you actually integrate regression checks into your process.

Performance testing

Performance testing—verifying that your application meets specific performance criteria—is a massive topic. There’s no one way to do it: your approach will vary according to request volume and data size. There are many varieties: load, stress, soak, and spike, to name a few. It’s too big a topic for this post. That said, we do have some thinking that can help you assess your maturity:

- Do not leave this phase for last. We cannot stress enough just how difficult this practice is and how much time we’ve seen sunk into failed efforts. Everything about it is difficult: modeling, standing up an environment, building the harness, assessing results, building it into your deployment pipeline.

- It’s critical to test against specific criteria. Don’t worry about getting your criteria wrong at first. You can change the criteria once you have real production data.

- Don’t assume you’ll reach web-scale in month two. You’ll waste huge cycles prematurely optimizing both your application and your tests. (Don’t read this as us saying, “Don’t consider what your actual scale might be.” We’re only suggesting that you be realistic and pragmatic.)

- Utilize production monitoring to the greatest extent possible. A canary release can go a long way toward verifying the performance of a new version of your application.
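
As an illustration of testing against specific criteria, the sketch below checks a set of measured latencies against a percentile limit. The criterion used in the example (95th percentile under 300 ms) and the function names are assumptions made for illustration; your own criteria should come from your requirements and, later, from real production data. The percentile uses the simple nearest-rank method.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_criterion(latencies_ms: Sequence[float], pct: float, limit_ms: float) -> bool:
    """Return True when the given percentile of the latencies is within the limit."""
    return percentile(latencies_ms, pct) <= limit_ms


if __name__ == "__main__":
    # Hypothetical measurements from a load test run, in milliseconds.
    latencies = [120, 135, 150, 180, 210, 220, 250, 260, 280, 900]
    print(meets_criterion(latencies, pct=95, limit_ms=300))
```

A check like this can serve as the pass/fail verification of a performance stage, and the limit is a single value you can revise once production data shows what is realistic.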

Production monitoring

Do you have a production monitoring strategy? Feedback loops aren’t only for pre-production phases. As much as we try to achieve dev/prod parity, production is truly a unique environment for most organizations and things can—and do—go wrong.

Here are some questions to help you assess your readiness:

- How quickly does your team know something is broken? Do they learn about it via monitoring? And then how fast can they respond?

- Does your team ignore alerts?

- Do your teams tend to invent an approach to monitoring as they go along? Believe it or not, we actually see this a lot and it’s not a good thing. Be as thoughtful about monitoring and alerts as you are with other parts of your application.

- Do you keep a database of events so that you can later query for patterns?
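
To show what querying an event database for patterns might look like, here is a small sketch using Python's built-in `sqlite3` module with an in-memory database. The table layout, the `kind` values, and the function names are hypothetical; a real setup would more likely use your monitoring system's own event store.

```python
import sqlite3
from typing import List, Tuple


def open_event_store() -> sqlite3.Connection:
    """Create an in-memory store with a minimal, assumed event schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (ts TEXT, service TEXT, kind TEXT)")
    return conn


def record_event(conn: sqlite3.Connection, ts: str, service: str, kind: str) -> None:
    conn.execute("INSERT INTO events VALUES (?, ?, ?)", (ts, service, kind))


def counts_by_service(conn: sqlite3.Connection, kind: str) -> List[Tuple[str, int]]:
    """Count events of one kind per service, most frequent first."""
    query = (
        "SELECT service, COUNT(*) FROM events WHERE kind = ? "
        "GROUP BY service ORDER BY COUNT(*) DESC, service"
    )
    return conn.execute(query, (kind,)).fetchall()


if __name__ == "__main__":
    conn = open_event_store()
    record_event(conn, "2024-01-01T10:00:00", "checkout", "error")
    record_event(conn, "2024-01-01T10:05:00", "checkout", "error")
    record_event(conn, "2024-01-01T10:07:00", "search", "error")
    record_event(conn, "2024-01-01T10:09:00", "search", "deploy")
    print(counts_by_service(conn, "error"))
```

Even a simple query like this can reveal which service generates the most errors, or whether errors cluster after deployments.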

User testing

Sitting users down in front of your application to try it out is a critical feedback loop. In an enterprise setting, we like to see two types of user testing: usability testing and user acceptance testing. Usability testing verifies that users find the application easy to use. User acceptance testing verifies that users can complete transactions with the application in a real-world setting. There can be a fair bit of overlap between the two types.

If you do not do user testing, you will struggle to get users to accept frequent releases of new versions. Users will only like rapid changes if the experience remains usable, consistent, and effective.

We also want to call out that these feedback loops are manual processes that often require weeks or months of elapsed time. They are typically not modeled into the deployment pipeline, and that’s fine. But do not leave them both batched up until the end. That’s a long wait period and likely an unknown amount of rework before deploying to prod. If you do this, your process will feel more like waterfall than CD. Run these user tests early and often, while you are writing the code.

Exploratory testing

All this talk of automation does not mean your testers should retire their analytical thinking and learn to program. In fact, test automation should free up your testers to do what they’re best at: use their brains. Exploratory testing is when a tester is simultaneously learning about the system, designing tests, and executing tests. It is when a tester gets into a deep flow, not even knowing what the next test is. This is where a good tester can really shine, doing some of their most valuable work.

For most types of applications, a test strategy should include skilled testers performing exploratory testing. This testing will find problems, teach you about your system, and inform your automated regression suite. As with user testing, this testing should be done throughout the development process and not as a gate at the end.

Summary

This list of feedback loops organizations should have in place before doing CD is not exhaustive (e.g., we didn’t discuss A/B testing). We have presented the more common feedback loops we see where CD has been successful. Obviously every situation presents different problems and has different needs.

You don’t need to have high marks on everything we have presented to begin your journey to CD. But if you are feeling only so-so against a majority of them, we’d suggest working on the individual pieces before approaching CD. Once you get enough of them in place, you will find that you’ve actually completed a large swath of your journey to Continuous Delivery.

In future parts of this series, we plan to explore culture, the last mile, and more.