At PipelineConf this year, I spoke about my experiences with serverless architectures and how they play well with the patterns and practices of continuous delivery. This post explains what serverless architectures are, what benefits they offer and how they can enable continuous delivery.

What is serverless?

We’re in buzzword bingo land here so it’s important that we start with a common definition of what serverless is:

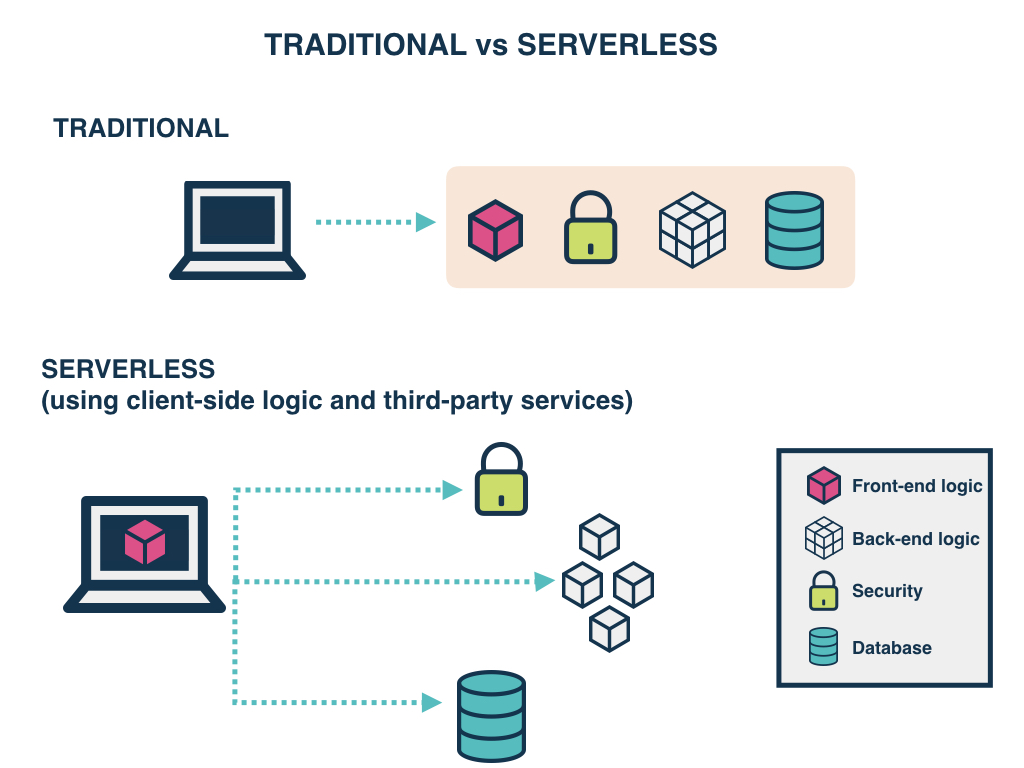

Serverless architectures are internet-based systems where the application development does not use the usual server process. Instead, they rely solely on a combination of third-party services, client-side logic and service-hosted remote procedure calls (Functions as a Service).

“Not the usual server process” means that your software isn’t running on a server that you have access to. You don’t own those servers. You can’t log in to them, even if you wanted to. They are abstracted away and managed for you by someone else, typically a cloud provider.

There are many immediate benefits to not managing your own servers:

- You don’t have to worry about them randomly rebooting or going down.

- You don’t end up with snowflake servers, where you don’t know quite what’s installed on them but they are mission-critical to your organisation.

- You’re not responsible for installing software on them. Even if you use configuration management tools such as Chef or Ansible to automate this, that’s still extra code you have to maintain over time.

By “third-party services”, I mean hosted databases like an AWS Elasticsearch cluster or Microsoft SQL Azure, or authentication services like Auth0 or AWS Cognito. You’re essentially outsourcing capabilities to specialised providers.

Client-side logic typically refers to rich client applications such as single-page web apps or native mobile apps.

Functions as a service

Let’s take a deeper look at Functions as a Service (FaaS) since they are newer, have significant differences to how we typically think about technical architecture, and have been driving a lot of the recent serverless hype.

Functions as a Service have six key properties:

Independent, server-side, logical functions

FaaS are much like the functions you’re used to writing in conventional programming languages: small, separate units of logic that take input arguments, process them in some manner, then return the result.
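To make that concrete, here is a minimal sketch of such a function. It uses the `handler(event, context)` entry point style that AWS Lambda uses for Python; the event fields (`items`, `price`, `quantity`) are made up for this example, not part of any real API.

```python
import json


def handler(event, context):
    """One event in, one result out, with no dependency on anything else."""
    # 'items', 'price' and 'quantity' are hypothetical field names.
    items = event.get("items", [])
    total = sum(item["price"] * item.get("quantity", 1) for item in items)
    return {"itemCount": len(items), "total": round(total, 2)}


if __name__ == "__main__":
    sample_event = {"items": [{"price": 2.5, "quantity": 2}, {"price": 1.0}]}
    print(json.dumps(handler(sample_event, None)))
```

Because the function only depends on its input, it can be called locally with a plain dictionary, exactly as the platform would call it with a real event.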

Stateless

As you’re not in control of the underlying server infrastructure, you can’t save a file to disk on one run of your function and expect it to be there the next. Any two invocations of the same function could potentially run on completely different servers under the hood.
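One way to picture this: anything that must survive between invocations lives in an external service, and the function only reads and writes it. The sketch below assumes a hypothetical `InMemoryStore` class as a stand-in for a hosted database; in a real system that would be a third-party service, ideally one that offers an atomic increment.

```python
class InMemoryStore:
    """Stand-in for an external service such as a hosted database (hypothetical)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def count_visit(event, store):
    """Increment a per-user counter held in the external store, not in the function."""
    key = f"visits:{event['userId']}"
    # Read-modify-write is not atomic; a real store would need an atomic increment.
    visits = store.get(key, 0) + 1
    store.put(key, visits)
    return {"userId": event["userId"], "visits": visits}
```

Nothing is kept in module-level variables or on local disk, so it makes no difference which server handles the next invocation.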

Ephemeral

FaaS are designed to spin up quickly, do their work and then shut down again.

Event-triggered

Although functions can be invoked directly, they are typically triggered by events from other cloud services, such as incoming HTTP requests, new database data or inbound message notifications. FaaS are often used and thought of as the glue between services in a cloud environment.
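As an illustration, a function triggered by an incoming HTTP request might look like the sketch below. The event and response shapes (`queryStringParameters`, `statusCode`, `headers`, `body`) are modelled on the AWS API Gateway proxy format as I understand it; treat the exact field names as assumptions and check your provider's documentation.

```python
import json


def handler(event, context):
    """Respond to an HTTP-style event with a JSON greeting."""
    # The platform may pass None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The function never opens a socket or parses a request itself. The platform turns the HTTP request into an event, and the returned dictionary into an HTTP response.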

Scalable by default

Because functions are independent, stateless and ephemeral, the platform can run as many instances as needed (in parallel, if necessary) to keep servicing all incoming event triggers.
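A rough local simulation shows why this works. Here a thread pool plays the part of the platform and runs one invocation per event; `resize_request` and its event fields are hypothetical, and a real provider schedules invocations across many machines rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor


def resize_request(event):
    """A stateless function: the result depends only on the incoming event."""
    scale = event["targetWidth"] / event["width"]
    return {"width": event["targetWidth"], "height": round(event["height"] * scale)}


def simulate_platform(events, max_workers=8):
    """Run one invocation per event concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(resize_request, events))
```

No invocation shares anything with another, so adding more workers needs no coordination between them.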

Fully managed by a third party

Examples are AWS Lambda, Azure Functions, Auth0 Webtasks or Google Cloud Functions. Each offering typically supports a range of languages and runtimes e.g. Node.js, .NET Core, Java.

Four key ways that Serverless Architectures enable Continuous Delivery

1. Naturally small deployable units

You really can’t get a smaller unit of functionality than a function, and this forces you to think small from the outset. In Continuous Delivery we want to be deploying the smallest thing that adds value to our business. When you have all these small, granular functions that can be deployed independently, you can start to ask interesting questions such as “Do we need to deploy more than one at a time?”, “Could we just deploy that one function?”, “Does that deliver value on its own?”.

2. Simple deployment model

Thanks to the small size of deployment artifacts, in general, deployments are simple and quick. Deployment artifacts are typically idiomatic of the chosen runtime e.g. NuGet packages, npm packages, JAR files. This, coupled with the growth of open source FaaS tooling (such as the Serverless Application Framework and ClaudiaJS), makes it simple to build an artifact and move it through a continuous delivery pipeline to production.
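To give a feel for how small these artifacts can be, the sketch below builds a zip archive for a single function and derives a short content hash that a pipeline could use as a version identifier. This is my own illustration, not how the tools named above work internally; `build_artifact` and the hashing scheme are assumptions.

```python
import hashlib
import io
import zipfile

# A fixed timestamp keeps the archive bytes identical for identical sources.
FIXED_TIMESTAMP = (1980, 1, 1, 0, 0, 0)


def build_artifact(files):
    """Zip a {path: source} mapping and return (zip_bytes, short_content_hash)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for path in sorted(files):
            info = zipfile.ZipInfo(path, date_time=FIXED_TIMESTAMP)
            # Mark the file as readable (rw-r--r--) once it is unpacked.
            info.external_attr = 0o644 << 16
            archive.writestr(info, files[path])
    data = buffer.getvalue()
    return data, hashlib.sha256(data).hexdigest()[:12]
```

The same bytes can then be promoted unchanged from one pipeline stage to the next, which is the usual continuous delivery rule of building once and deploying many times.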

3. No more “works on my machine”

As you will recall, FaaS are fully managed by a third party. This means that in many cases you cannot test your application in the same way as a traditional application. What I have found useful is for each developer to have their own cloud-hosted sandbox. Developers will run a script that will deploy their functions and associated services (e.g. HTTP API endpoints) to that sandbox, then execute tests against the deployed system in situ. You might think, “oh, the feedback loop is going to be slow” but as deployments of FaaS are so quick this usually doesn’t cause too many problems.

And the fact that you can’t run tests locally forces you to push testing closer to production. Every time developers deploy to their own sandboxes, they are exercising the same deployment scripts that run as part of their main deployment pipeline. Therefore, you are getting continuous feedback on the effectiveness of your pipeline steps.

This production-like testing cycle, coupled with a greater focus on monitoring and operability, gives you much greater confidence in the quality of your system, and removes a lot of barriers that can typically be found on the path to continuous delivery.
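A test run against a sandbox can be as simple as the sketch below, which calls a deployed HTTP endpoint and checks the response. The `/hello` path, the `name` parameter and the response shape are hypothetical; the `fetch` argument exists only so the check can be exercised without a network connection.

```python
import json
import urllib.request


def http_get(url):
    """Fetch a URL and return (status_code, body_text)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status, response.read().decode("utf-8")


def smoke_test(base_url, fetch=http_get):
    """Call the deployed endpoint in the sandbox and check what comes back."""
    status, body = fetch(f"{base_url}/hello?name=sandbox")
    assert status == 200, f"unexpected status {status}"
    message = json.loads(body)["message"]
    assert "sandbox" in message, f"unexpected message {message!r}"
    return message
```

A developer would pass in the base URL printed by their deployment script, and the pipeline can run the same check against each later environment.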

4. Serverless enables focus on business value

It’s always worth remembering that our goal as software engineers is not to continuously deliver code to production, but to instead continuously deliver working software that provides value to our users. Serverless architectures really allow you to focus on your core competencies, while offloading capabilities you don’t want to care about.

For example, to implement log shipping and aggregation for a system, your team could spend a number of weeks developing and deploying a custom solution to managed servers (whether on premise or in the cloud). Even by choosing mature products, e.g. Splunk or the ELK stack, and implementing good practices such as Infrastructure as Code, you will incur an initial implementation cost (in both time and money) as well as an ongoing penalty in terms of maintenance and operability. Alternatively, if your system was built using AWS Lambda and the Node.js runtime, then a simple console.log statement will send your logs to Amazon CloudWatch Logs, where they are kept for a retention period you can configure. This gets you a long way towards a sustainable solution for a much reduced set of upfront and ongoing costs.
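The same idea applies to other runtimes. In a Python function on AWS Lambda, anything written to standard output is forwarded to the platform's log service, so `print` plays the role that console.log does in Node.js. The sketch below writes one JSON object per line to make the logs easier to search; the `log` helper and the `orderId` field are hypothetical.

```python
import json
import time


def log(level, message, **fields):
    """Write one JSON log line to standard output."""
    record = {"level": level, "message": message, "timestamp": time.time(), **fields}
    print(json.dumps(record))


def handler(event, context):
    """Log the incoming order and acknowledge it."""
    log("INFO", "order received", orderId=event.get("orderId"))
    return {"status": "accepted"}
```

There is no log agent to install and no shipping pipeline to maintain; the function just writes a line and moves on.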

This reasoning can be applied to many other examples. The key point here is that by using serverless architectures, you can get a lot of functionality “out of the box”. This allows you to focus on work that directly benefits your users and can give you a distinct advantage over your competitors who are having to spread their efforts over a greater technical surface area.

This article covered some of the key components of serverless architecture and focussed in particular on Functions as a Service (FaaS). We saw that serverless architectures can be great enablers of Continuous Delivery by combining a focus on value with small, quick-to-deploy, independent units of functionality. While of course there are downsides (which we have deliberately avoided discussing here), implementing a serverless architecture helps support these fundamentals of Continuous Delivery, and could well be a game changer for you and your business.

Robin Weston is a Lead Consultant at Thoughtworks. Robin has worked in technology for over 10 years and has been at Thoughtworks since 2014. He cares passionately about helping deliver value to clients through simple, effective software.